- Published on Wednesday, May 14, 2025, last updated

Key Metrics in Code Collaboration: Insights on Commit Frequency, PR Reviews, and Merge Efficiency

, and the health of that motion shows up in how they collaborate on code. In this guide we break down three critical, measurable levers—commit frequency, pull request reviews, and merge efficiency—and show you concrete ways to tighten feedback loops, cut waste, and raise quality. Each section is backed by research from GitHub’s Octoverse, DORA’s (DevOps Research and Assessment) studies, and field data from high-performing engineering orgs.

Measuring Code Collaboration: Why and How?

Metrics bring clarity. By tracking the right signals, you spot bottlenecks early and avoid invisible drag on delivery. Start with three questions:

- Are we committing often enough to stay in sync?

- Do our pull request reviews surface issues quickly and share knowledge?

- How smoothly do changes land once they are approved?

Answering those questions requires hard numbers, not gut feel. Git analytics platforms, your CI server, and lightweight dashboards can surface GitHub code review metrics, cycle times, and failure trends without extra manual work, and those numbers show which code review best practices to adopt first.

“What gets measured gets improved—and what gets shared gets adopted.”

—Lisa Holt, VP of Engineering, OptiDeploy

Commit Frequency: Balancing Speed and Stability

A steady stream of small commits keeps work visible and integration pain low. In GitHub's 2024 enterprise sample, elite teams averaged 4 commits per developer per day with a median pull request lifetime under two hours, evidence that high commit velocity and quality can coexist.

The Impact of Commit Frequency on Code Quality

Frequent vs. infrequent commits: striking the right balance

Frequent commits reduce merge conflicts and enable faster feedback. Infrequent, “big-bang” commits often hide defects and overwhelm reviewers.

Risks of excessive small commits vs. large, infrequent commits

- Too many trivial commits may bury meaningful context.

- Large infrequent commits delay detection of integration issues and inflate pull request cycle time.

Best Practices for Effective Commit Strategies

Writing meaningful commit messages

A concise subject plus a why-focused body improves future debugging. For standards, see our in-depth guide on .

Using atomic commits to improve readability and debugging

An atomic commit implements one logical change, so reverting it should not break the build. This practice simplifies cherry-picks and bisects.

How team size and project type influence commit frequency

Microservice repositories favor many small commits, while monoliths and regulated domains may batch changes more. Measure your current cadence, review it as a team, and agree on thresholds.

Common Issues with Commit Frequency

Overcommitting vs. undercommitting: finding the optimal cadence

Watch the ratio of commits to lines changed. A spike can indicate “commit spam,” while a drought signals siloed development.
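One way to watch that ratio is to normalize it per author and compare each person against the team median. The sketch below assumes weekly per-author totals have already been collected (for example, by summing additions and deletions from `git log --numstat`); the `WeeklyActivity` shape, the 3x spike factor, and the one-commit drought threshold are all hypothetical values to tune for your team.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class WeeklyActivity:
    author: str
    commits: int
    lines_changed: int  # additions + deletions for the week (assumed pre-aggregated)


def commits_per_100_lines(a: WeeklyActivity) -> float:
    """Commits per 100 changed lines; 0.0 when nothing changed."""
    if a.lines_changed == 0:
        return 0.0
    return 100 * a.commits / a.lines_changed


def flag_cadence(activity: list[WeeklyActivity], spike_factor: float = 3.0,
                 drought_commits: int = 1) -> dict[str, str]:
    """Flag authors far above the team median ratio ("spike")
    or with almost no commits at all ("drought")."""
    if not activity:
        return {}
    team_median = median(commits_per_100_lines(a) for a in activity)
    flags = {}
    for a in activity:
        if a.commits <= drought_commits:
            flags[a.author] = "drought"
        elif team_median > 0 and commits_per_100_lines(a) > spike_factor * team_median:
            flags[a.author] = "spike"
    return flags
```

Comparing against the team median rather than a fixed number keeps the check meaningful across repositories with very different commit styles.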

The dangers of unreviewed rapid commits in collaborative environments

Rapid, unreviewed pushes on shared branches can break builds and erode trust. Use protected branches to require pull request reviews before merge.

Tip — Use automated to nudge contributors when daily commits drop below target.
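A reminder like this needs a rule for when to fire. A minimal sketch, assuming you already have a list of daily commit counts per contributor: averaging over a short window avoids nagging someone after a single quiet day. The `needs_nudge` name, the five-day window, and the target value are hypothetical.

```python
from statistics import mean


def needs_nudge(daily_commits: list[int], target: float, window: int = 5) -> bool:
    """Return True when the average over the last `window` working days
    falls below the team's agreed daily target."""
    if len(daily_commits) < window:
        return False  # too little history to judge fairly
    return mean(daily_commits[-window:]) < target
```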

Pull Request Reviews: Ensuring Code Quality and Knowledge Sharing

Reviews are the guardrails of shared ownership. Strong review culture hinges on clear guidelines and lightweight process—not heavyweight ceremony.

What Makes a PR Review Effective?

- Clear purpose: bug fix, feature, or refactor.

- Complete context: linked ticket, screenshots, test plan.

- Constructive tone: request changes with clear reasoning.

Key PR Review Metrics to Track

Review Time: How long does it take to review and approve PRs?

Aim for under one working day; faster for critical fixes.
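"One working day" is easier to enforce if review time is measured in business hours rather than wall-clock hours, so a PR opened late on Friday is not penalized for the weekend. The sketch below assumes naive datetimes in a single time zone, business hours of 09:00 to 17:00 Monday through Friday, an 8-hour working day, and a 2-hour limit for critical fixes; all of these are illustrative assumptions.

```python
from datetime import datetime, time, timedelta

WORK_START = time(9)   # assumed business hours
WORK_END = time(17)


def business_hours(start: datetime, end: datetime) -> float:
    """Hours between start and end that fall inside weekday business hours."""
    total = timedelta()
    day = start.date()
    while day <= end.date():
        if day.weekday() < 5:  # Monday=0 ... Friday=4
            window_start = datetime.combine(day, WORK_START)
            window_end = datetime.combine(day, WORK_END)
            overlap_start = max(start, window_start)
            overlap_end = min(end, window_end)
            if overlap_end > overlap_start:
                total += overlap_end - overlap_start
        day += timedelta(days=1)
    return total.total_seconds() / 3600


def review_sla_breached(opened: datetime, approved: datetime, critical: bool = False) -> bool:
    """Compare review time with an assumed 8h (one working day) or 2h critical limit."""
    limit_hours = 2 if critical else 8
    return business_hours(opened, approved) > limit_hours
```

For example, a PR opened Friday 16:00 and approved Monday 10:00 counts as two business hours, not 66 wall-clock hours.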

Review Depth: Are reviewers catching potential issues or approving too quickly?

Track comment density; pair it with defect-escape rate for context.
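Pairing the two numbers is what gives them meaning: a low comment density is only a problem if defects are also slipping through. A minimal sketch, assuming you can count review comments, changed lines, and defects found in review versus after merge; the density and escape-rate thresholds are hypothetical.

```python
def comment_density(review_comments: int, lines_changed: int) -> float:
    """Review comments per 100 changed lines."""
    return 0.0 if lines_changed == 0 else 100 * review_comments / lines_changed


def defect_escape_rate(found_in_review: int, found_after_merge: int) -> float:
    """Share of known defects that slipped past review."""
    total = found_in_review + found_after_merge
    return 0.0 if total == 0 else found_after_merge / total


def review_signal(density: float, escape_rate: float,
                  low_density: float = 1.0, high_escape: float = 0.2) -> str:
    """Interpret both metrics together (thresholds are illustrative)."""
    if density < low_density and escape_rate > high_escape:
        return "rubber-stamp risk"  # few comments and defects escaping
    if escape_rate > high_escape:
        return "reviews miss defects"  # active discussion, but wrong focus
    return "ok"
```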

Number of Reviewers per PR: Finding the balance between collaboration and bottlenecks

One knowledgeable reviewer often suffices; add reviewers for security-critical code.
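That rule can be expressed as a path check on the files a PR touches. The sketch assumes a hypothetical list of glob patterns for security-sensitive areas; on GitHub, a CODEOWNERS file combined with required code-owner reviews is the usual native way to route such paths to the right people.

```python
from fnmatch import fnmatch

# Hypothetical patterns; replace with your repository's sensitive paths.
SECURITY_PATTERNS = ["auth/*", "crypto/*", "*.pem", "infra/iam/*"]


def required_reviewers(changed_paths: list[str], patterns: list[str] = SECURITY_PATTERNS) -> int:
    """One reviewer by default, two when any changed file matches a sensitive pattern."""
    sensitive = any(fnmatch(path, pat) for path in changed_paths for pat in patterns)
    return 2 if sensitive else 1
```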

Best Practices for Efficient PR Reviews

Establishing clear PR review guidelines

Document expectations in a CONTRIBUTING.md and link it from your policy.

Using automated tools for linting, testing, and security checks

Shift rote checks to CI so humans can focus on logic. This shortens time-based review metrics such as time to first comment.

Encouraging constructive feedback and knowledge-sharing

Rotate reviewers to spread expertise and curb overload.
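A simple round-robin is one way to rotate reviewers while curbing overload: it skips the PR author and anyone already at their open-review limit. The `ReviewerRotation` class and its `max_open` cap are hypothetical; a real setup would persist state and sync open counts from your Git host.

```python
from typing import Optional


class ReviewerRotation:
    def __init__(self, reviewers: list[str], max_open: int = 3):
        self.reviewers = list(reviewers)
        self.max_open = max_open
        self.open_reviews = {r: 0 for r in self.reviewers}
        self._next = 0  # index of the next candidate in the rotation

    def assign(self, author: str) -> Optional[str]:
        """Pick the next eligible reviewer, or None if everyone is busy."""
        for _ in range(len(self.reviewers)):
            candidate = self.reviewers[self._next]
            self._next = (self._next + 1) % len(self.reviewers)
            if candidate != author and self.open_reviews[candidate] < self.max_open:
                self.open_reviews[candidate] += 1
                return candidate
        return None

    def complete(self, reviewer: str) -> None:
        """Mark one of the reviewer's open reviews as finished."""
        self.open_reviews[reviewer] = max(0, self.open_reviews[reviewer] - 1)
```

Returning `None` when everyone is at capacity makes overload visible instead of silently piling more reviews onto one person.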

Data point: Teams with automated linters saw a 32% drop in review iterations on average (GitHub Octoverse 2024).

Merge Efficiency: Speed vs. Stability in Code Integration

Merge efficiency measures how quickly and safely approved code lands on the main branch.

Key Merge Metrics to Monitor

Merge Time: How long does it take for a PR to get merged after approval?

Low-friction pipelines merge within minutes of approval; a gap of more than 24 hours usually signals manual gates or flaky tests.
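Merge lag is the time from the last approval to the merge itself. A minimal sketch, assuming each PR record already carries an approval timestamp (for example, the latest review in the `APPROVED` state) and a `merged_at` timestamp that is `None` for unmerged PRs; the dictionary shape and the 24-hour threshold are assumptions.

```python
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


def slow_merges(prs: list[dict], threshold: timedelta = timedelta(hours=24)) -> list[int]:
    """PR numbers whose approval-to-merge gap exceeds the threshold."""
    slow = []
    for pr in prs:
        if pr["merged_at"] is None:
            continue  # still open or closed without merging
        if pr["merged_at"] - pr["approved_at"] > threshold:
            slow.append(pr["number"])
    return slow


def median_merge_lag_minutes(prs: list[dict]) -> Optional[float]:
    """Median approval-to-merge time in minutes across merged PRs."""
    lags = [(pr["merged_at"] - pr["approved_at"]).total_seconds() / 60
            for pr in prs if pr["merged_at"] is not None]
    return median(lags) if lags else None
```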

Merge Conflicts: How frequently do conflicts occur, and how are they resolved?

Track conflict rate by branch age. A high conflict count usually points to stale branches or infrequent rebasing.
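Bucketing merges by how long their branch lived makes the relationship between age and conflicts visible. The sketch below assumes each merge record holds the branch age in days and whether a conflict had to be resolved; the bucket boundaries are arbitrary illustrative choices.

```python
from collections import defaultdict

# Illustrative age buckets in days: (inclusive low, exclusive high, label).
AGE_BUCKETS = [(0, 1, "<1 day"), (1, 3, "1-3 days"), (3, 7, "3-7 days"), (7, float("inf"), "7+ days")]


def age_bucket(age_days: float) -> str:
    for low, high, label in AGE_BUCKETS:
        if low <= age_days < high:
            return label
    raise ValueError(f"invalid branch age: {age_days}")


def conflict_rate_by_age(merges: list[dict]) -> dict[str, float]:
    """Fraction of merges with conflicts, per branch-age bucket."""
    totals: dict[str, int] = defaultdict(int)
    conflicts: dict[str, int] = defaultdict(int)
    for m in merges:
        label = age_bucket(m["branch_age_days"])
        totals[label] += 1
        conflicts[label] += int(m["had_conflict"])
    return {label: conflicts[label] / totals[label] for label in totals}
```

If the rate climbs sharply in the older buckets, a maximum branch age is likely to pay off.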

Failed vs. Successful Merges: Tracking rollback rates and post-merge issues

High rollback frequency often links back to poor test coverage or skipped reviews.
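A rough rollback rate can be derived from commit subjects on the main branch, because `git revert` by default writes a subject of the form `Revert "<original subject>"`. This is a heuristic sketch: it assumes the default revert message was kept, matches on subject text rather than commit SHAs, and will miss rollbacks done by other means (such as a redeploy of an older build).

```python
import re

# git revert's default subject line: Revert "<original subject>"
REVERT_RE = re.compile(r'^Revert "(?P<subject>.+)"$')


def rollback_rate(subjects: list[str]) -> float:
    """Fraction of non-revert commits whose subject was later reverted."""
    reverted = set()
    originals = []
    for s in subjects:
        m = REVERT_RE.match(s)
        if m:
            reverted.add(m.group("subject"))
        else:
            originals.append(s)
    if not originals:
        return 0.0
    return sum(1 for s in originals if s in reverted) / len(originals)
```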

Best Practices for Improving Merge Efficiency

Keeping branches updated to minimize conflicts

Encourage daily rebase or use bots that auto-update.
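A bot or script that prompts authors to update needs two inputs: how far a branch has fallen behind and how long since it last synced. The sketch below counts commits on the base that the branch lacks with `git rev-list --count <branch>..<base>` (run `git fetch` first so the remote ref is current). The `needs_update` rule and its 20-commit and 24-hour limits are hypothetical.

```python
import subprocess


def commits_behind(branch: str, base: str = "origin/main") -> int:
    """Count commits reachable from `base` that `branch` does not contain."""
    result = subprocess.run(
        ["git", "rev-list", "--count", f"{branch}..{base}"],
        check=True, capture_output=True, text=True,
    )
    return int(result.stdout.strip())


def needs_update(behind: int, hours_since_sync: float,
                 max_behind: int = 20, max_hours: float = 24) -> bool:
    """Ask for a rebase when far behind, or when behind at all and the branch is stale."""
    return behind > max_behind or (behind > 0 and hours_since_sync > max_hours)
```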

Using feature flags and continuous integration (CI) to reduce merge risks

Flags decouple deployment from release; CI enforces quality gates.
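To illustrate how a flag separates deployment from release, here is a minimal percentage-rollout check: code for the new path ships to production, but only a stable slice of users sees it. Hashing the flag name with the user ID keeps each user's assignment consistent across requests. The flag name, the 10% value, and the in-memory `FLAGS` dictionary are hypothetical; production systems usually read flag state from a flag service or config store.

```python
import hashlib

FLAGS = {"new-checkout": 10}  # hypothetical flag: enabled for 10% of users


def in_rollout(flag: str, user_id: str, percent: int) -> bool:
    """Deterministically place a user in a 0-99 bucket for this flag."""
    digest = hashlib.sha256(f"{flag}:{user_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) % 100
    return bucket < percent


def checkout(user_id: str) -> str:
    if in_rollout("new-checkout", user_id, FLAGS["new-checkout"]):
        return "new checkout flow"
    return "legacy checkout flow"
```

Raising the percentage is then a release decision that needs no new merge or deploy.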

Establishing branch protection rules without slowing down development

Enforce required tests and approvals only on critical branches; keep auxiliary branches lightweight.
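One way to keep protection targeted is to generate rules only for branches on an explicit critical list. The payload shape below is modeled on the request body of GitHub's REST endpoint `PUT /repos/{owner}/{repo}/branches/{branch}/protection`; check field names against the current GitHub documentation before sending it. `CRITICAL_BRANCHES` and the single required approval are hypothetical defaults.

```python
from typing import Optional

# Hypothetical set of branches that deserve strict protection.
CRITICAL_BRANCHES = {"main", "release"}


def protection_payload(branch: str, required_checks: list[str],
                       approvals: int = 1) -> Optional[dict]:
    """Build a branch-protection body for critical branches; None means leave unprotected."""
    if branch not in CRITICAL_BRANCHES:
        return None  # auxiliary branches stay lightweight
    return {
        # strict=True requires the branch to be up to date before merging
        "required_status_checks": {"strict": True, "contexts": list(required_checks)},
        "enforce_admins": True,
        "required_pull_request_reviews": {"required_approving_review_count": approvals},
        "restrictions": None,  # no push restrictions beyond the rules above
    }
```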

Common Issues in Merge Efficiency

Why some teams experience slow merges and how to speed them up

Symptoms: long queues, manual QA sign-off, or nightly deploy windows. Solutions: parallel environments, merge queues, and automated smoke tests.
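The core idea of a merge queue is to test each PR against the latest state of main plus everything queued ahead of it, not against the possibly stale base it was branched from. The serial sketch below models that with a `passes` callable standing in for a CI run; real merge queues typically test several candidates in parallel, which this simplification omits.

```python
from collections import deque
from collections.abc import Callable


def run_merge_queue(main: list[str], queue: list[str],
                    passes: Callable[[list[str]], bool]) -> tuple[list[str], list[str]]:
    """Land PRs in order if CI passes on main + PR; eject the ones that fail."""
    pending = deque(queue)
    main = list(main)
    ejected = []
    while pending:
        pr = pending.popleft()
        candidate = main + [pr]  # test on top of the latest main, not the PR's old base
        if passes(candidate):
            main = candidate
        else:
            ejected.append(pr)
    return main, ejected
```

Because each candidate includes earlier merges, two PRs that pass individually but break together are caught before the second one lands.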

Handling merge conflicts effectively to avoid delays

Use IDE conflict helpers, set max branch age, and invest in pairing sessions for hairy conflicts.

Preventing broken builds and post-merge failures

Ruthlessly quarantine flaky tests, and treat a failed main build as a fire drill to protect the developer workflow.
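A common working definition of a flaky test is one that both passes and fails on the same commit. The sketch below applies that definition to CI results, assumed to be available as `(commit_sha, test_name, passed)` tuples, and then excludes quarantined tests from the set of failures that should break the main build. Quarantined tests should still be reported so someone fixes them.

```python
from collections import defaultdict


def find_flaky(runs: list[tuple[str, str, bool]]) -> list[str]:
    """Tests that both passed and failed on the same commit."""
    outcomes: dict[tuple[str, str], set[bool]] = defaultdict(set)
    for sha, test, passed in runs:
        outcomes[(sha, test)].add(passed)
    return sorted({test for (_, test), seen in outcomes.items() if len(seen) == 2})


def blocking_failures(results: list[tuple[str, bool]], quarantined: set[str]) -> list[str]:
    """Failures that should fail the build; quarantined tests are non-blocking."""
    return [test for test, passed in results if not passed and test not in quarantined]
```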

Better Automation with Code Collaboration Tools

Modern code collaboration tools remove drudgery so developers stay focused on value. These categories matter most:

CI/CD pipelines for smoother code integration

Automate build, test, and deploy so every push runs through a full safety net. Fast pipelines correlate strongly with shorter pull request cycle times and are now considered a code review best practice.

Git analytics tools to track key metrics

Dashboards aggregating GitHub code review metrics, commit trends, and failure rates provide targets everyone can see. Some solutions live directly inside your , merging alerts with chat for real time code collaboration.

Optimizing Code Collaboration for High-Performing Teams

Metrics without action are trivia. Routinely review dashboards, celebrate wins, and adjust constraints where pain persists. Here’s a quick checklist:

- Set baselines. Capture current commit frequency, median review time, and merge efficiency.

- Automate the obvious. Linters, unit tests, and merge queues buy you hours each week.

- Iterate policies. Treat guidelines as living docs. Gather retro feedback and refine.

- Invest in people. Rotate ownership, mentor juniors, and encourage pair programming to multiply impact.

- Leverage developer collaboration tools—from live-share IDEs to async video—for distributed teams.

“Continuous improvement in collaboration metrics translates directly to customer-visible velocity.”

—DORA 2024 Accelerate Report

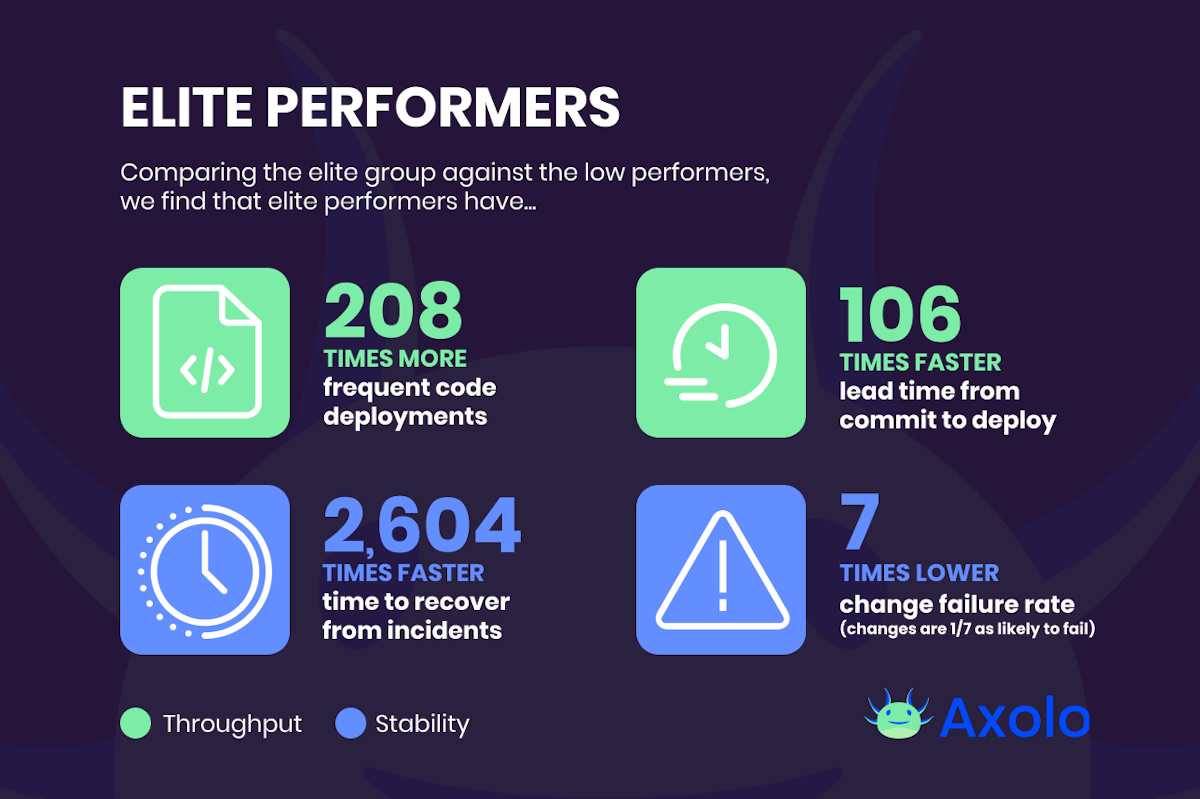

To learn more about DORA metrics, see our dedicated article: How to Implement the Four Key Accelerate DevOps Metrics.

How Axolo Helps Engineering Teams Optimize Key Metrics in Code Collaboration

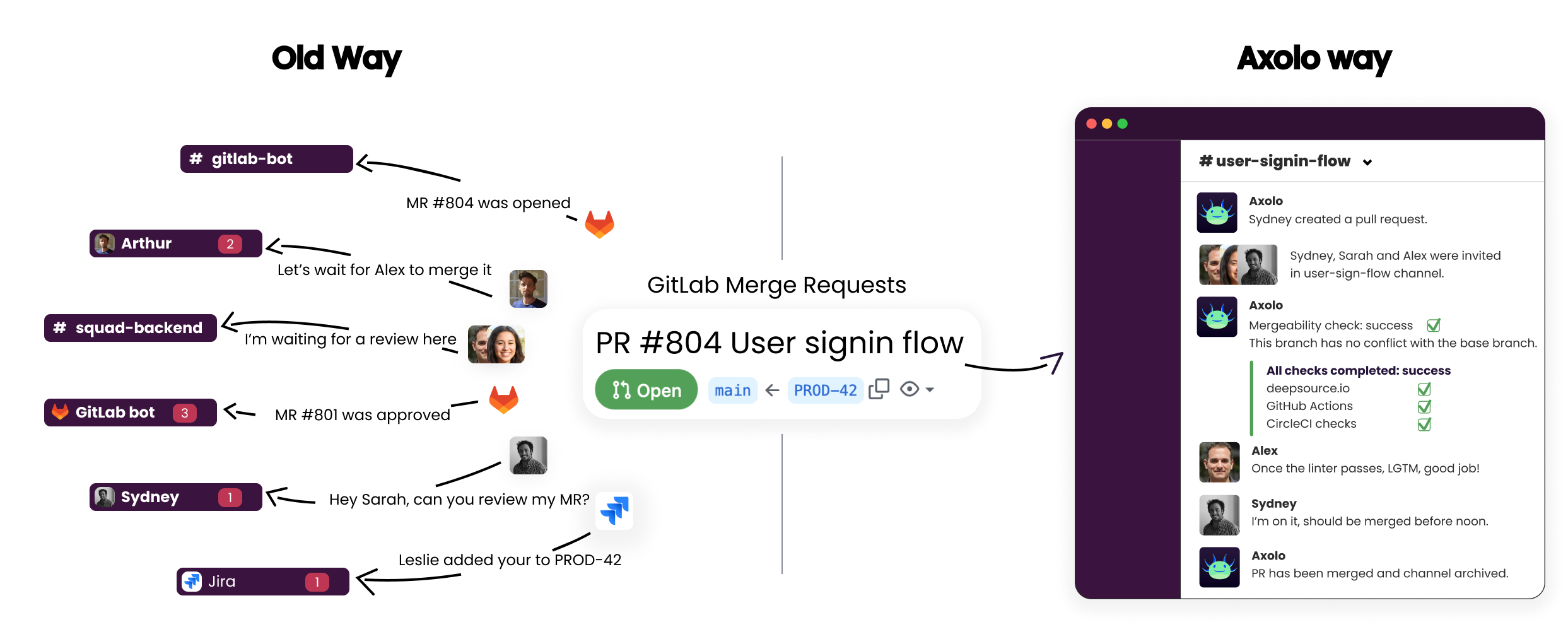

Axolo plugs directly into GitHub/GitLab and Slack, turning both into a single code collaboration hub. Each new PR spins up a focused Slack channel, giving your team real-time code collaboration on diffs, inline comments, and CI status without context-switching. By routing pull request reviews to the right people immediately, Axolo trims pull request cycle time and improves merge efficiency.

Built-in reminders enforce code review best practices so PRs no longer go stale, which boosts reviewer responsiveness and keeps commit frequency steady. Axolo's dashboard aggregates GitHub code review metrics such as review latency, comment depth, and approval patterns, so you can spot bottlenecks early and refine pull request best practices. All metrics are exportable for deeper analysis alongside your existing developer collaboration tools.

If you need a pragmatic way to improve code review metrics and accelerate delivery, Axolo's targeted notifications and analytics deliver measurable gains in weeks, not quarters.

Finally, embrace pull request best practices, cultivate code review best practices and implement code collaboration tools so trust and autonomy replace red tape. When metrics trend in the right direction, you ship faster with fewer defects—and developers stay in flow, doing what they love: .